As we navigate the final chapters of late-stage capitalism, a new specter looms large over our cultural landscape: Artificial Intelligence (AI) generated content. While often promoted as a triumph of technological progress, what lies beneath is a sinister ghost that deepens the commodification of creativity and reinforces the dominance of market-driven aesthetics. The proliferation of AI slop is undeniable, shoveled into advertisements and films, tricking our grandparents on Facebook, and stealing work from humans.

Scott Draves, Electric Sheep, 1999

When AI artwork first appeared, it birthed a genuinely new aesthetic full of blobby faces and new forms forged from the six-fingered hands of computers. In 2015, Google engineer Alexander Mordvintsev developed DeepDream, a computer vision program that could be called the beginning of AI art as we know it today. It used a neural network (and a lot of code) to enhance and modify images in a process likened to “pareidolia,” the tendency to see meaningful shapes in random patterns: the network over-interpreted and amplified patterns it detected in images, often creating hallucinogenic and surreal visual effects. *

Initially designed to visualize and understand how neural networks operate, DeepDream gained popularity for its artistic potential and instantaneous nightmare fuel. It became widely known due to its ability to transform ordinary photographs into complex and abstract works of art, sparking interest not only in the tech community but also among artists, designers, and the general public.

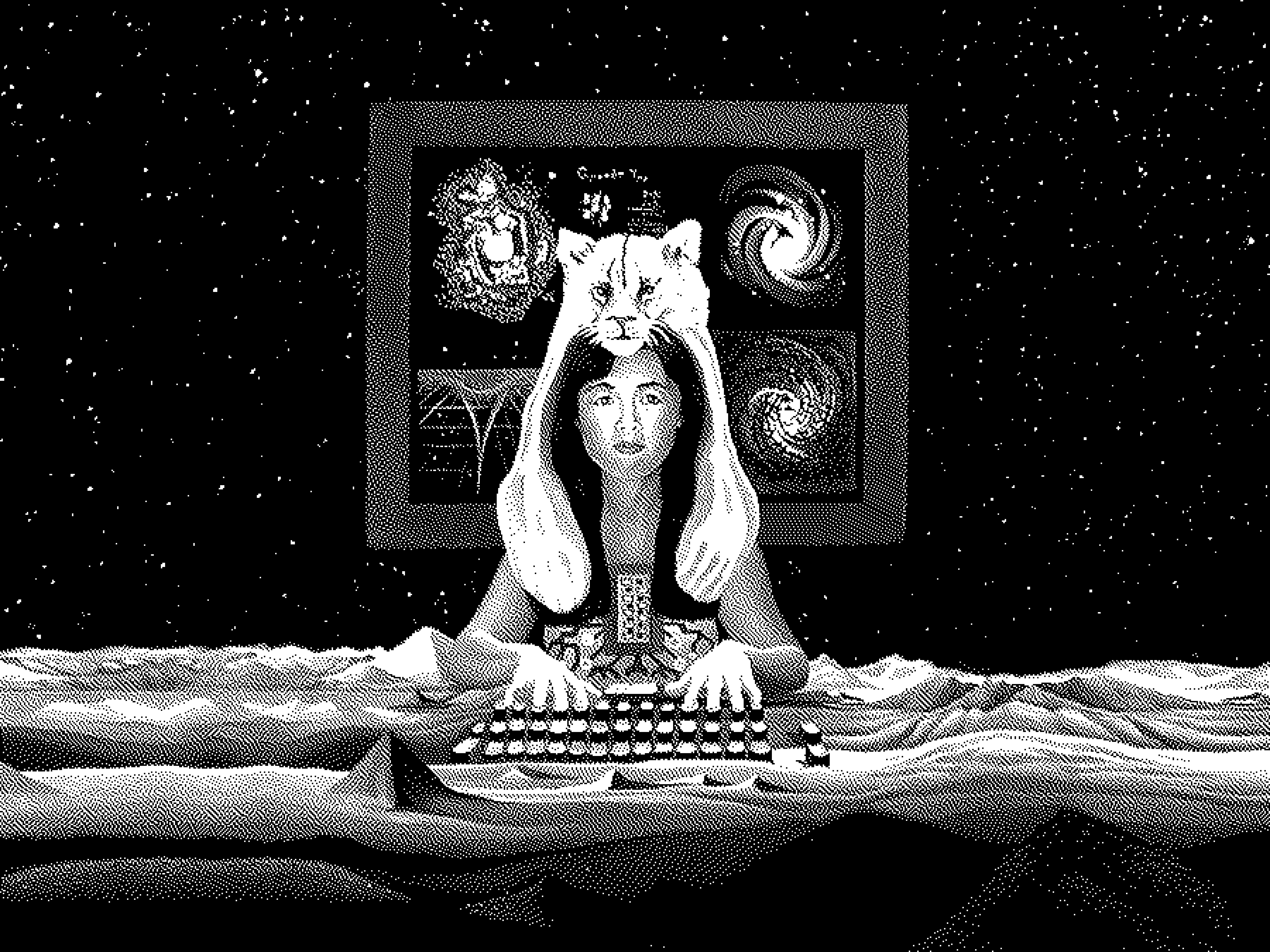

Lynn Randolph, Cyborg, 1989

The use of machines to generate art dates back to Daedalus. In the digital age, generative artworks such as Scott Draves’s Electric Sheep screensaver (1999) and the work of Karl Sims in the 1980s and 1990s laid the foundation for this work, despite looking far more abstract than what we now consider AI art. Building on DeepDream’s development, the Paris-based art collective Obvious created Edmond de Belamy* in 2018, a fictional portrait generated by a Generative Adversarial Network (GAN), a type of model that learns patterns from a large data set and produces new content (in this case, images) resembling it. It became famous as the first AI-created piece auctioned at Christie’s, where it sold for a comically overpriced $432,500 (fetching 43 times its estimate).1 What differentiated this piece from previous AI art was that it was completely generative yet highly referential to artworks that came before it. It was a smudgy simulacrum of a painting that looked a hundred years old, with the artist’s intent replaced by a cold, hard machine. The piece itself was subjectively ugly, but Obvious was clever enough to use it as a conversational lightning rod, all while making a quick buck off the easily gamified and financially craven contemporary art market.

Obvious, Edmond de Belamy, 2018

Today, AI art generators are a dime a dozen, with every major tech company releasing or acquiring its own to showcase to the public (Midjourney, DALL-E, and Stable Diffusion are among the major players). Even Photoshop pushes its own text-to-image generator and forces new AI features on you every time you open the program. There are several obvious issues with these text-to-image generators (they are terrible for the environment, trained on copyrighted works, and reductive and unoriginal), but the heart of the issue is that no real artist ever asked for this. Anyone with an elementary knowledge of aesthetic theory, or who has found joy in putting pen to paper, understands that the creation of art is not something that can be replicated, nor is it something that artists want replicated. I want AI to do the things I don’t want to do (sending invoices, for example, although I would not trust it to do that successfully), not the things I enjoy doing most, like creating images. Only the transactional tenacity of the tech world could think that the art of aesthetics is something that could easily be multiplied and automated.

AI has not spared graphic design either. There are numerous AI-powered logo generators, UI generators, and even font generators. Any criticism directed at AI-generated art also applies to graphic design, as these tools produce pale, cheap imitations of the real thing and should be avoided by clients and used deftly and sparingly by designers. I’ve even seen AI-generated art used in presentation decks by non-image makers to visualize their ideas, a sad and scary reality for all working graphic designers. While this newfound act of visual creativity may feel exciting for them, it is an insult to those who shape aesthetics and a flawed, inadequate way to convey ideas. AI, in its early days, struggled most with typography—the visual foundation of language—as a true understanding of letterforms can require a lifetime of study. It frequently fails to make any meaningful statement, visually or otherwise, so it’s no surprise that the visual representation of words is where it tends to falter most.

That’s not to say that AI-generated art doesn’t have its place. Shunning a technology just because of its negative effects is a fool’s errand, because to understand something, you must grapple with it. Those who say, “it’s so over” while posting the ugliest image ever imagined by AI clearly don’t know good art. And those who ban the use of AI-generated images are shutting themselves off from the first genuinely new technological aesthetic since the advent of the personal computer in the early 1980s. I have used AI countless times in sketches, collages, and certain parts of final designs, but in each of these instances, I made sure the human element was present because, without that, images are meaningless. My first reaction when I realize an image has been made by AI is simply, “This was made with AI,” and that kills all other feelings I may have otherwise had about the image. AI on its own is fantastic nightmare fuel and a goldmine of memes; just don’t use it to replace a real artist. Any graphic designer or artist with enough technical skills and cultural cachet will do better than a robot, and anyone who doesn’t understand that should not be in the position to hire artists or influence those who do.

Kevan J. Atteberry, Clippit, 1997

As we drift further down the hazy river of late capitalism, AI-generated content emerges from the smoke as a perfect symbol of everything wrong with today’s culture: fast, convenient, ugly, and empty. It only makes sense that it has become the official aesthetic of America’s fascist downward turn, or as technology writer Charlie Warzel puts it, “The MAGA Aesthetic Is AI Slop.”2 It serves as an attempt to replace all that is holy to image makers with the schlock of the new. As it pushes its blobby faces and six-fingered hands further into the everyday, it is important to push back and define it as a false idol against true art and design. While it can still be wielded deftly by those who understand it, it should never be used as a total replacement for a human being by any fool who has never put in the time or effort to contribute to the art of visual creation. It is only through this lens that we can see AI art for what it is: an enticing but empty spectacle, a digital mirage that mirrors our culture’s surrender to profit over meaning.

* More often than not, dogs appeared where they shouldn’t because the model was trained on a subset of the ImageNet database released in 2012 that contained “fine-grained classification of 120 dog sub-classes.”

* The name “Belamy” is a pun on the name of Ian Goodfellow, a Google engineer credited with inventing GANs: “bel ami” is French for “good friend.”
